Name | DisplayName | Description |

AD-Certificate | Active Directory Certificate Services | Active Directory Certificate Services (AD CS) is used to create certification authorities and related role services that allow you to issue and manage certificates used in a variety of applications. |

ADCS-Cert-Authority | Certification Authority | Certification Authority (CA) is used to issue and manage certificates. Multiple CAs can be linked to form a public key infrastructure. |

ADCS-Enroll-Web-Pol | Certificate Enrollment Policy Web Service | The Certificate Enrollment Policy Web Service enables users and computers to obtain certificate enrollment policy information even when the computer is not a member of a domain or if a domain-joined computer is temporarily outside the security boundary of the corporate network. The Certificate Enrollment Policy Web Service works with the Certificate Enrollment Web Service to provide policy-based automatic certificate enrollment for these users and computers. |

ADCS-Enroll-Web-Svc | Certificate Enrollment Web Service | The Certificate Enrollment Web Service enables users and computers to enroll for and renew certificates even when the computer is not a member of a domain or if a domain-joined computer is temporarily outside the security boundary of the computer network. The Certificate Enrollment Web Service works together with the Certificate Enrollment Policy Web Service to provide policy-based automatic certificate enrollment for these users and computers. |

ADCS-Web-Enrollment | Certification Authority Web Enrollment | Certification Authority Web Enrollment provides a simple Web interface that allows users to perform tasks such as request and renew certificates, retrieve certificate revocation lists (CRLs), and enroll for smart card certificates. |

ADCS-Device-Enrollment | Network Device Enrollment Service | Network Device Enrollment Service makes it possible to issue and manage certificates for routers and other network devices that do not have network accounts. |

ADCS-Online-Cert | Online Responder | Online Responder makes certificate revocation checking data accessible to clients in complex network environments. |

AD-Domain-Services | Active Directory Domain Services | Active Directory Domain Services (AD DS) stores information about objects on the network and makes this information available to users and network administrators. AD DS uses domain controllers to give network users access to permitted resources anywhere on the network through a single logon process. |

ADFS-Federation | Active Directory Federation Services | Active Directory Federation Services (AD FS) provides simplified, secured identity federation and Web single sign-on (SSO) capabilities. AD FS includes a Federation Service that enables browser-based Web SSO. |

ADLDS | Active Directory Lightweight Directory Services | Active Directory Lightweight Directory Services (AD LDS) provides a store for application-specific data, for directory-enabled applications that do not require the infrastructure of Active Directory Domain Services. Multiple instances of AD LDS can exist on a single server, each of which can have its own schema. |

ADRMS | Active Directory Rights Management Services | Active Directory Rights Management Services (AD RMS) helps you protect information from unauthorized use. AD RMS establishes the identity of users and provides authorized users with licenses for protected information. |

ADRMS-Server | Active Directory Rights Management Server | Active Directory Rights Management Services (AD RMS) helps you protect information from unauthorized use. AD RMS establishes the identity of users and provides authorized users with licenses for protected information. |

ADRMS-Identity | Identity Federation Support | Identity Federation Support leverages federated trust relationships between your organization and other organizations to establish user identities and provide access to protected information created by either organization. For example, a trust created with Active Directory Federation Services can be used to establish user identities for AD RMS. |

DHCP | DHCP Server | Dynamic Host Configuration Protocol (DHCP) Server enables you to centrally configure, manage, and provide temporary IP addresses and related information for client computers. |

DNS | DNS Server | Domain Name System (DNS) Server provides name resolution for TCP/IP networks. DNS Server is easier to manage when it is installed on the same server as Active Directory Domain Services. If you select the Active Directory Domain Services role, you can install and configure DNS Server and Active Directory Domain Services to work together. |

Fax | Fax Server | Fax Server sends and receives faxes and allows you to manage fax resources such as jobs, settings, reports, and fax devices on this computer or on the network. |

FileAndStorage-Services | File and Storage Services | File and Storage Services includes services that are always installed, as well as functionality that you can install to help manage file servers and storage. |

File-Services | File and iSCSI Services | File and iSCSI Services provides technologies that help you manage file servers and storage, reduce disk space utilization, replicate and cache files to branch offices, move or fail over a file share to another cluster node, and share files by using the NFS protocol. |

FS-FileServer | File Server | File Server manages shared folders and enables users to access files on this computer from the network. |

FS-BranchCache | BranchCache for Network Files | BranchCache for Network Files provides support for BranchCache on this file server. BranchCache is a wide area network (WAN) bandwidth optimization technology that caches content from your main office content servers at branch office locations, allowing client computers at branch offices to access the content locally rather than over the WAN. After you complete installation, you must share folders and enable hash generation for shared folders by using Group Policy or Local Computer Policy. |

FS-Data-Deduplication | Data Deduplication | Data Deduplication saves disk space by storing a single copy of identical data on the volume. |

FS-DFS-Namespace | DFS Namespaces | DFS Namespaces enables you to group shared folders located on different servers into one or more logically structured namespaces. Each namespace appears to users as a single shared folder with a series of subfolders. However, the underlying structure of the namespace can consist of numerous shared folders located on different servers and in multiple sites. |

FS-DFS-Replication | DFS Replication | DFS Replication is a multimaster replication engine that enables you to synchronize folders on multiple servers across local or wide area network (WAN) connections. It uses the Remote Differential Compression (RDC) protocol to update only the portions of files that have changed since the last replication. DFS Replication can be used in conjunction with DFS Namespaces, or by itself. |

FS-Resource-Manager | File Server Resource Manager | File Server Resource Manager helps you manage and understand the files and folders on a file server by scheduling file management tasks and storage reports, classifying files and folders, configuring folder quotas, and defining file screening policies. |

FS-VSS-Agent | File Server VSS Agent Service | File Server VSS Agent Service enables you to perform volume shadow copies of applications that store data files on this file server. |

FS-iSCSITarget-Server | iSCSI Target Server | iSCSI Target Server provides services and management tools for iSCSI targets. |

iSCSITarget-VSS-VDS | iSCSI Target Storage Provider (VDS and VSS hardware providers) | iSCSI Target Storage Provider enables applications on a server that are connected to an iSCSI target to perform volume shadow copies of data on iSCSI virtual disks. It also enables you to manage iSCSI virtual disks by using older applications that require a Virtual Disk Service (VDS) hardware provider, such as the Diskraid command. |

FS-NFS-Service | Server for NFS | Server for NFS enables this computer to share files with UNIX-based computers and other computers that use the network file system (NFS) protocol. |

FS-SyncShareService | Work Folders | Work Folders provides a way to use work files from a variety of computers, including work and personal devices. You can use Work Folders to host user files and keep them synchronized - whether users access their files from inside the network or from across the Internet. |

Storage-Services | Storage Services | Storage Services provides storage management functionality that is always installed and cannot be removed. |

HostGuardianServiceRole | Host Guardian Service | The Host Guardian Service (HGS) server role provides the Attestation & Key Protection services that enable Guarded Hosts to run Shielded virtual machines. The Attestation service validates Guarded Host identity & configuration. The Key Protection service enables distributed access to encrypted transport keys to enable Guarded Hosts to unlock and run Shielded virtual machines. |

Hyper-V | Hyper-V | Hyper-V provides the services that you can use to create and manage virtual machines and their resources. Each virtual machine is a virtualized computer system that operates in an isolated execution environment. This allows you to run multiple operating systems simultaneously. |

MultiPointServerRole | MultiPoint Services | MultiPoint Services allows multiple users, each with their own independent and familiar Windows experience, to simultaneously share one computer. |

NetworkController | Network Controller | The Network Controller provides the point of automation needed for continual configuration, monitoring and diagnostics of virtual networks, physical networks, network services, network topology, address management, etc. within a datacenter stamp. |

NPAS | Network Policy and Access Services | Network Policy and Access Services provides Network Policy Server (NPS), which helps safeguard the security of your network. |

Print-Services | Print and Document Services | Print and Document Services enables you to centralize print server and network printer management tasks. With this role, you can also receive scanned documents from network scanners and route the documents to a shared network resource, Windows SharePoint Services site, or e-mail addresses. |

Print-Server | Print Server | Print Server includes the Print Management snap-in, which is used for managing multiple printers or print servers and migrating printers to and from other Windows print servers. |

Print-Scan-Server | Distributed Scan Server | Distributed Scan Server provides the service which receives scanned documents from network scanners and routes them to the correct destinations. It also includes the Scan Management snap-in, which you can use to manage network scanners and configure scan processes. |

Print-Internet | Internet Printing | Internet Printing creates a Web site where users can manage print jobs on the server. It also enables users who have Internet Printing Client installed to use a Web browser to connect and print to shared printers on this server by using the Internet Printing Protocol (IPP). |

Print-LPD-Service | LPD Service | Line Printer Daemon (LPD) Service enables UNIX-based computers or other computers using the Line Printer Remote (LPR) service to print to shared printers on this server. |

RemoteAccess | Remote Access | Remote Access provides seamless connectivity through DirectAccess, VPN, and Web Application Proxy. DirectAccess provides an Always On and Always Managed experience. RAS provides traditional VPN services, including site-to-site (branch-office or cloud-based) connectivity. Web Application Proxy enables the publishing of selected HTTP- and HTTPS-based applications from your corporate network to client devices outside of the corporate network. Routing provides traditional routing capabilities, including NAT and other connectivity options. RAS and Routing can be deployed in single-tenant or multi-tenant mode. |

DirectAccess-VPN | DirectAccess and VPN (RAS) | DirectAccess gives users the experience of being seamlessly connected to their corporate network any time they have Internet access. With DirectAccess, mobile computers can be managed any time the computer has Internet connectivity, ensuring mobile users stay up-to-date with security and system health policies. VPN uses the connectivity of the Internet plus a combination of tunnelling and data encryption technologies to connect remote clients and remote offices. |

Routing | Routing | Routing provides support for NAT Routers, LAN Routers running BGP, RIP, and multicast capable routers (IGMP Proxy). |

Web-Application-Proxy | Web Application Proxy | Web Application Proxy enables the publishing of selected HTTP- and HTTPS-based applications from your corporate network to client devices outside of the corporate network. It can use AD FS to ensure that users are authenticated before they gain access to published applications. Web Application Proxy also provides proxy functionality for your AD FS servers. |

Remote-Desktop-Services | Remote Desktop Services | Remote Desktop Services enables users to access virtual desktops, session-based desktops, and RemoteApp programs. Use the Remote Desktop Services installation to configure a Virtual machine-based or a Session-based desktop deployment. |

RDS-Connection-Broker | Remote Desktop Connection Broker | Remote Desktop Connection Broker (RD Connection Broker) allows users to reconnect to their existing virtual desktops, RemoteApp programs, and session-based desktops. It enables even load distribution across RD Session Host servers in a session collection or across pooled virtual desktops in a pooled virtual desktop collection, and provides access to virtual desktops in a virtual desktop collection. |

RDS-Gateway | Remote Desktop Gateway | Remote Desktop Gateway (RD Gateway) enables authorized users to connect to virtual desktops, RemoteApp programs, and session-based desktops on the corporate network or over the Internet. |

RDS-Licensing | Remote Desktop Licensing | Remote Desktop Licensing (RD Licensing) manages the licenses required to connect to a Remote Desktop Session Host server or a virtual desktop. You can use RD Licensing to install, issue, and track the availability of licenses. |

RDS-RD-Server | Remote Desktop Session Host | Remote Desktop Session Host (RD Session Host) enables a server to host RemoteApp programs or session-based desktops. Users can connect to RD Session Host servers in a session collection to run programs, save files, and use resources on those servers. Users can access an RD Session Host server by using the Remote Desktop Connection client or by using RemoteApp programs. |

RDS-Virtualization | Remote Desktop Virtualization Host | Remote Desktop Virtualization Host (RD Virtualization Host) enables users to connect to virtual desktops by using RemoteApp and Desktop Connection. |

RDS-Web-Access | Remote Desktop Web Access | Remote Desktop Web Access (RD Web Access) enables users to access RemoteApp and Desktop Connection through the Start menu or through a web browser. RemoteApp and Desktop Connection provides users with a customized view of RemoteApp programs, session-based desktops, and virtual desktops. |

VolumeActivation | Volume Activation Services | Volume Activation Services enables you to automate and simplify the management of Key Management Service (KMS) host keys and the volume key activation infrastructure for a network. With this service you can install and manage a KMS host, or configure Microsoft Active Directory-Based Activation to provide volume activation for domain-joined systems. |

Web-Server | Web Server (IIS) | Web Server (IIS) provides a reliable, manageable, and scalable Web application infrastructure. |

Web-WebServer | Web Server | Web Server provides support for HTML Web sites and optional support for ASP.NET, ASP, and Web server extensions. You can use the Web Server to host an internal or external Web site or to provide an environment for developers to create Web-based applications. |

Web-Common-Http | Common HTTP Features | Common HTTP Features supports basic HTTP functionality, such as delivering standard file formats and configuring custom server properties. Use Common HTTP Features to create custom error messages, to configure how the server responds to requests that do not specify a document, or to automatically redirect some requests to a different location. |

Web-Default-Doc | Default Document | Default Document lets you configure a default file for the Web server to return when users do not specify a file in a request URL. Default documents make it easier and more convenient for users to reach your Web site. |

Web-Dir-Browsing | Directory Browsing | Directory Browsing lets users see the contents of a directory on your Web server. Use Directory Browsing to enable an automatically generated list of all directories and files available in a directory when users do not specify a file in a request URL and default documents are either disabled or not configured. |

Web-Http-Errors | HTTP Errors | HTTP Errors allows you to customize the error messages returned to users' browsers when the Web server detects a fault condition. Use HTTP errors to provide users with a better user experience when they run up against an error message. Consider providing users with an e-mail address for staff who can help them resolve the error. |

Web-Static-Content | Static Content | Static Content allows the Web server to publish static Web file formats, such as HTML pages and image files. Use Static Content to publish files on your Web server that users can then view using a Web browser. |

Web-Http-Redirect | HTTP Redirection | HTTP Redirection provides support to redirect user requests to a specific destination. Use HTTP redirection whenever you want customers who might use one URL to actually end up at another URL. This is helpful in many situations, from simply renaming your Web site, to overcoming a domain name that is difficult to spell, or forcing clients to use a secure channel. |

Web-DAV-Publishing | WebDAV Publishing | WebDAV Publishing (Web Distributed Authoring and Versioning) enables you to publish files to and from a Web server by using the HTTP protocol. Because WebDAV uses HTTP, it works through most firewalls without modification. |

Web-Health | Health and Diagnostics | Health and Diagnostics provides infrastructure to monitor, manage, and troubleshoot the health of Web servers, sites, and applications. |

Web-Http-Logging | HTTP Logging | HTTP Logging provides logging of Web site activity for this server. When a loggable event, usually an HTTP transaction, occurs, IIS calls the selected logging module, which then writes to one of the logs stored in the file system of the Web server. These logs are in addition to those provided by the operating system. |

Web-Custom-Logging | Custom Logging | Custom Logging provides support for logging Web server activity in a format that differs considerably from the manner in which IIS generates log files. Use Custom Logging to create your own logging module. Custom logging modules are added to IIS by registering a new COM component that implements ILogPlugin or ILogPluginEx. |

Web-Log-Libraries | Logging Tools | Logging Tools provides infrastructure to manage Web server logs and automate common logging tasks. |

Web-ODBC-Logging | ODBC Logging | ODBC Logging provides infrastructure that supports logging Web server activity to an ODBC-compliant database. With a logging database, you can programmatically display and manipulate data from the logging database on an HTML page. You might do this to search logs for specific events, or to call out user-defined events that you want to monitor. |

Web-Request-Monitor | Request Monitor | Request Monitor provides infrastructure to monitor Web application health by capturing information about HTTP requests in an IIS worker process. Administrators and developers can use Request Monitor to understand which HTTP requests are executing in a worker process when the worker process has become unresponsive or very slow. |

Web-Http-Tracing | Tracing | Tracing provides infrastructure to diagnose and troubleshoot Web applications. With failed request tracing, you can troubleshoot difficult to capture events like poor performance, or authentication related failures. This feature buffers trace events for a request and only flushes them to disk if the request falls into a user-configured error condition. |

Web-Performance | Performance | Performance provides infrastructure for output caching by integrating the dynamic output-caching capabilities of ASP.NET with the static output-caching capabilities that were present in IIS 6.0. IIS also lets you use bandwidth more effectively and efficiently by using common compression mechanisms such as Gzip and Deflate. |

Web-Stat-Compression | Static Content Compression | Static Content Compression provides infrastructure to configure HTTP compression of static content. This allows more efficient use of bandwidth. Unlike dynamic responses, compressed static responses can be cached without degrading CPU resources. |

Web-Dyn-Compression | Dynamic Content Compression | Dynamic Content Compression provides infrastructure to configure HTTP compression of dynamic content. Enabling dynamic compression always gives you more efficient utilization of bandwidth, but if your server's processor utilization is already very high, the CPU load imposed by dynamic compression might make your site perform more slowly. |

Web-Security | Security | Security provides infrastructure for securing the Web server from users and requests. IIS supports multiple authentication methods. Pick an appropriate authentication scheme based upon the role of the server. Filter all incoming requests, rejecting without processing requests that match user defined values, or restrict requests based on originating address space. |

Web-Filtering | Request Filtering | Request Filtering screens all incoming requests to the server and filters these requests based on rules set by the administrator. Many malicious attacks share common characteristics, like extremely long requests, or requests for an unusual action. By filtering requests, you can attempt to mitigate the impact of these types of attacks. |

Web-Basic-Auth | Basic Authentication | Basic authentication offers strong browser compatibility. Appropriate for small internal networks, this authentication method is rarely used on the public Internet. Its major disadvantage is that it transmits passwords across the network using an easily decrypted algorithm. If intercepted, these passwords are simple to decipher. Use SSL with Basic authentication. |

Web-CertProvider | Centralized SSL Certificate Support | Centralized SSL Certificate Support enables you to manage SSL server certificates centrally using a file share. Maintaining SSL server certificates on a file share simplifies management since there is one place to manage them. |

Web-Client-Auth | Client Certificate Mapping Authentication | Client Certificate Mapping Authentication uses client certificates to authenticate users. A client certificate is a digital ID from a trusted source. IIS offers two types of authentication using client certificate mapping. This type uses Active Directory to offer one-to-one certificate mappings across multiple Web servers. |

Web-Digest-Auth | Digest Authentication | Digest authentication works by sending a password hash to a Windows domain controller to authenticate users. When you need improved security over Basic authentication, consider using Digest authentication, especially if users who must be authenticated access your Web site from behind firewalls and proxy servers. |

Web-Cert-Auth | IIS Client Certificate Mapping Authentication | IIS Client Certificate Mapping Authentication uses client certificates to authenticate users. A client certificate is a digital ID from a trusted source. IIS offers two types of authentication using client certificate mapping. This type uses IIS to offer one-to-one or many-to-one certificate mapping. Native IIS mapping of certificates offers better performance. |

Web-IP-Security | IP and Domain Restrictions | IP and Domain Restrictions allow you to enable or deny content based upon the originating IP address or domain name of the request. Instead of using groups, roles, or NTFS file system permissions to control access to content, you can specify IP addresses or domain names. |

Web-Url-Auth | URL Authorization | URL Authorization allows you to create rules that restrict access to Web content. You can bind these rules to users, groups, or HTTP header verbs. By configuring URL authorization rules, you can prevent employees who are not members of certain groups from accessing content or interacting with Web pages. |

Web-Windows-Auth | Windows Authentication | Windows authentication is a low cost authentication solution for internal Web sites. This authentication scheme allows administrators in a Windows domain to take advantage of the domain infrastructure for authenticating users. Do not use Windows authentication if users who must be authenticated access your Web site from behind firewalls and proxy servers. |

Web-App-Dev | Application Development | Application Development provides infrastructure for developing and hosting Web applications. Use these features to create Web content or extend the functionality of IIS. These technologies typically provide a way to perform dynamic operations that result in the creation of HTML output, which IIS then sends to fulfill client requests. |

Web-Net-Ext | .NET Extensibility 3.5 | .NET extensibility allows managed code developers to change, add and extend web server functionality in the entire request pipeline, the configuration, and the UI. Developers can use the familiar ASP.NET extensibility model and rich .NET APIs to build Web server features that are just as powerful as those written using the native C++ APIs. |

Web-Net-Ext45 | .NET Extensibility 4.6 | .NET extensibility allows managed code developers to change, add and extend web server functionality in the entire request pipeline, the configuration, and the UI. Developers can use the familiar ASP.NET extensibility model and rich .NET APIs to build Web server features that are just as powerful as those written using the native C++ APIs. |

Web-AppInit | Application Initialization | Application Initialization performs expensive web application initialization tasks before serving web pages. |

Web-ASP | ASP | Active Server Pages (ASP) provides a server side scripting environment for building Web sites and Web applications. Offering improved performance over CGI scripts, ASP provides IIS with native support for both VBScript and JScript. Use ASP if you have existing applications that require ASP support. For new development, consider using ASP.NET. |

Web-Asp-Net | ASP.NET 3.5 | ASP.NET provides a server side object oriented programming environment for building Web sites and Web applications using managed code. ASP.NET is not simply a new version of ASP. Having been entirely re-architected to provide a highly productive programming experience based on the .NET Framework, ASP.NET provides a robust infrastructure for building web applications. |

Web-Asp-Net45 | ASP.NET 4.6 | ASP.NET provides a server side object oriented programming environment for building Web sites and Web applications using managed code. ASP.NET 4.6 is not simply a new version of ASP. Having been entirely re-architected to provide a highly productive programming experience based on the .NET Framework, ASP.NET provides a robust infrastructure for building web applications. |

Web-CGI | CGI | CGI defines how a Web server passes information to an external program. Typical uses might include using a Web form to collect information and then passing that information to a CGI script to be emailed somewhere else. Because CGI is a standard, CGI scripts can be written using a variety of programming languages. The downside to using CGI is the performance overhead. |

Web-ISAPI-Ext | ISAPI Extensions | Internet Server Application Programming Interface (ISAPI) Extensions provides support for dynamic Web content development using ISAPI extensions. An ISAPI extension runs when requested just like any other static HTML file or dynamic ASP file. Since ISAPI applications are compiled code, they are processed much faster than ASP files or files that call COM+ components. |

Web-ISAPI-Filter | ISAPI Filters | Internet Server Application Programming Interface (ISAPI) Filters provides support for Web applications that use ISAPI filters. ISAPI filters are files that can extend or change the functionality provided by IIS. An ISAPI filter reviews every request made to the Web server, until the filter finds one that it needs to process. |

Web-Includes | Server Side Includes | Server Side Includes (SSI) is a scripting language used to dynamically generate HTML pages. The script runs on the server before the page is delivered to the client and typically involves inserting one file into another. You might create an HTML navigation menu and use SSI to dynamically add it to all pages on a Web site. |

Web-WebSockets | WebSocket Protocol | IIS 10.0 and ASP.NET 4.6 support writing server applications that communicate over the WebSocket Protocol. |

Web-Ftp-Server | FTP Server | FTP Server enables the transfer of files between a client and server by using the FTP protocol. Users can establish an FTP connection and transfer files by using an FTP client or FTP-enabled Web browser. |

Web-Ftp-Service | FTP Service | FTP Service enables FTP publishing on a Web server. |

Web-Ftp-Ext | FTP Extensibility | FTP Extensibility enables support for FTP extensibility features such as custom providers, ASP.NET users or IIS Manager users. |

Web-Mgmt-Tools | Management Tools | Management Tools provide infrastructure to manage a Web server that runs IIS 10. You can use the IIS user interface, command-line tools, and scripts to manage the Web server. You can also edit the configuration files directly. |

Web-Mgmt-Console | IIS Management Console | IIS Management Console provides infrastructure to manage IIS 10 by using a user interface. You can use the IIS management console to manage a local or remote Web server that runs IIS 10. To manage SMTP, you must install and use the IIS 6 Management Console. |

Web-Mgmt-Compat | IIS 6 Management Compatibility | IIS 6 Management Compatibility provides forward compatibility for your applications and scripts that use the two IIS APIs, Admin Base Object (ABO) and Active Directory Service Interface (ADSI). You can use existing IIS 6 scripts to manage the IIS 10 Web server. |

Web-Metabase | IIS 6 Metabase Compatibility | IIS 6 Metabase Compatibility provides infrastructure to query and configure the metabase so that you can run applications and scripts migrated from earlier versions of IIS that use Admin Base Object (ABO) or Active Directory Service Interface (ADSI) APIs. |

Web-Lgcy-Mgmt-Console | IIS 6 Management Console | IIS 6 Management Console provides infrastructure for administration of remote IIS 6.0 servers from this computer. |

Web-Lgcy-Scripting | IIS 6 Scripting Tools | IIS 6 Scripting Tools provide the ability to continue using IIS 6 scripting tools that you built to manage IIS 6 in IIS 10, especially if your applications and scripts use ActiveX Data Objects (ADO) or Active Directory Service Interface (ADSI) APIs. IIS 6 Scripting Tools require Windows Process Activation Service Configuration API. |

Web-WMI | IIS 6 WMI Compatibility | IIS 6 WMI Compatibility provides Windows Management Instrumentation (WMI) scripting interfaces to programmatically manage and automate tasks for IIS 10.0 Web server, from a set of scripts that you created in the WMI provider. This service includes the WMI CIM Studio, WMI Event Registration, WMI Event Viewer, and WMI Object Browser tools to manage sites. |

Web-Scripting-Tools | IIS Management Scripts and Tools | IIS Management Scripts and Tools provide infrastructure to programmatically manage an IIS 10 Web server by using commands in a command window or by running scripts. You can use these tools when you want to automate commands in batch files or when you do not want to incur the overhead of managing IIS by using the user interface. |

Web-Mgmt-Service | Management Service | Management Service allows the Web server to be managed remotely from another computer using IIS Manager. |

WDS | Windows Deployment Services | Windows Deployment Services provides a simplified, secure means of rapidly and remotely deploying Windows operating systems to computers over the network. |

WDS-Deployment | Deployment Server | Deployment Server provides the full functionality of Windows Deployment Services, which you can use to configure and remotely install Windows operating systems. With Windows Deployment Services, you can create and customize images and then use them to reimage computers. Deployment Server is dependent on the core parts of Transport Server. |

WDS-Transport | Transport Server | Transport Server provides a subset of the functionality of Windows Deployment Services. It contains only the core networking parts, which you can use to transmit data using multicasting on a stand-alone server. You should use this role service if you want to transmit data using multicasting, but do not want to incorporate all of Windows Deployment Services. |

ServerEssentialsRole | Windows Server Essentials Experience | Windows Server Essentials Experience sets up the IT infrastructure and provides powerful functions such as PC backups that help protect data, and Remote Web Access that helps access business information from virtually anywhere. Windows Server Essentials also helps you to easily and quickly connect to cloud-based applications and services to extend the functionality of your server. |

UpdateServices | Windows Server Update Services | Windows Server Update Services allows network administrators to specify the Microsoft updates that should be installed, create separate groups of computers for different sets of updates, and get reports on the compliance levels of the computers and the updates that must be installed. |

UpdateServices-WidDB | WID Connectivity | Installs the database used by WSUS into WID. |

UpdateServices-Services | WSUS Services | Installs the services used by Windows Server Update Services: Update Service, the Reporting Web Service, the API Remoting Web Service, the Client Web Service, the Simple Web Authentication Web Service, the Server Synchronization Service, and the DSS Authentication Web Service. |

UpdateServices-DB | SQL Server Connectivity | Installs the feature that enables WSUS to connect to a Microsoft SQL Server database. |

NET-Framework-Features | .NET Framework 3.5 Features | .NET Framework 3.5 combines the power of the .NET Framework 2.0 APIs with new technologies for building applications that offer appealing user interfaces, protect your customers' personal identity information, enable seamless and secure communication, and provide the ability to model a range of business processes. |

NET-Framework-Core | .NET Framework 3.5 (includes .NET 2.0 and 3.0) | .NET Framework 3.5 combines the power of the .NET Framework 2.0 APIs with new technologies for building applications that offer appealing user interfaces, protect your customers' personal identity information, enable seamless and secure communication, and provide the ability to model a range of business processes. |

NET-HTTP-Activation | HTTP Activation | HTTP Activation supports process activation via HTTP. Applications that use HTTP Activation can start and stop dynamically in response to work items that arrive over the network via HTTP. |

NET-Non-HTTP-Activ | Non-HTTP Activation | Non-HTTP Activation supports process activation via Message Queuing, TCP and named pipes. Applications that use Non-HTTP Activation can start and stop dynamically in response to work items that arrive over the network via Message Queuing, TCP and named pipes. |

NET-Framework-45-Features | .NET Framework 4.6 Features | .NET Framework 4.6 provides a comprehensive and consistent programming model for quickly and easily building and running applications that are built for various platforms including desktop PCs, Servers, smart phones and the public and private cloud. |

NET-Framework-45-Core | .NET Framework 4.6 | .NET Framework 4.6 provides a comprehensive and consistent programming model for quickly and easily building and running applications that are built for various platforms including desktop PCs, Servers, smart phones and the public and private cloud. |

NET-Framework-45-ASPNET | ASP.NET 4.6 | ASP.NET 4.6 provides core support for running ASP.NET 4.6 stand-alone applications as well as applications that are integrated with IIS. |

NET-WCF-Services45 | WCF Services | Windows Communication Foundation (WCF) Activation uses Windows Process Activation Service to invoke applications remotely over the network by using protocols such as HTTP, Message Queuing, TCP, and named pipes. Consequently, applications can start and stop dynamically in response to incoming work items, resulting in application hosting that is more robust, manageable, and efficient. |

NET-WCF-HTTP-Activation45 | HTTP Activation | HTTP Activation supports process activation via HTTP. Applications that use HTTP Activation can start and stop dynamically in response to work items that arrive over the network via HTTP. |

NET-WCF-MSMQ-Activation45 | Message Queuing (MSMQ) Activation | Message Queuing Activation supports process activation via Message Queuing. Applications that use Message Queuing Activation can start and stop dynamically in response to work items that arrive over the network via Message Queuing. |

NET-WCF-Pipe-Activation45 | Named Pipe Activation | Named Pipes Activation supports process activation via named pipes. Applications that use Named Pipes Activation can start and stop dynamically in response to work items that arrive over the network via named pipes. |

NET-WCF-TCP-Activation45 | TCP Activation | TCP Activation supports process activation via TCP. Applications that use TCP Activation can start and stop dynamically in response to work items that arrive over the network via TCP. |

NET-WCF-TCP-PortSharing45 | TCP Port Sharing | TCP Port Sharing allows multiple net.tcp applications to share a single TCP port. Consequently, these applications can coexist on the same physical computer in separate, isolated processes, while sharing the network infrastructure required to send and receive traffic over a TCP port, such as port 808. |

BITS | Background Intelligent Transfer Service (BITS) | Background Intelligent Transfer Service (BITS) asynchronously transfers files in the foreground or background, controls the flow of the transfers to preserve the responsiveness of other network applications, and automatically resumes file transfers after disconnecting from the network or restarting the computer. |

BITS-IIS-Ext | IIS Server Extension | IIS Server Extension allows a computer to receive files uploaded by clients that implement the BITS upload protocol. |

BITS-Compact-Server | Compact Server | BITS Compact Server is a stand-alone HTTPS file server that lets you transfer a limited number of large files asynchronously between computers in the same domain or mutually-trusted domains. |

BitLocker | BitLocker Drive Encryption | BitLocker Drive Encryption helps to protect data on lost, stolen, or inappropriately decommissioned computers by encrypting the entire volume and checking the integrity of early boot components. Data is only decrypted if those components are successfully verified and the encrypted drive is located in the original computer. Integrity checking requires a compatible Trusted Platform Module (TPM). |

BitLocker-NetworkUnlock | BitLocker Network Unlock | BitLocker Network Unlock enables a network-based key protector to be used to automatically unlock BitLocker-protected operating system drives in domain-joined computers when the computer is restarted. This is beneficial if you are doing maintenance operations on computers during non-working hours that require the computer to be restarted to complete the operation. |

BranchCache | BranchCache | <a href="http://go.microsoft.com/fwlink/?LinkId=244672">BranchCache</a> installs the services required to configure this computer as either a hosted cache server or a BranchCache-enabled content server. If you are deploying a content server, it must also be configured as either a Hypertext Transfer Protocol (HTTP) web server or a Background Intelligent Transfer Service (BITS)-based application server. To deploy a BranchCache-enabled file server, use the Add Roles Wizard to install the File Services server role with the File Server and BranchCache for network files role services. |

Canary-Network-Diagnostics | Canary Network Diagnostics | Canary network diagnostics enables validation of the physical network. |

NFS-Client | Client for NFS | Client for NFS enables this computer to access files on UNIX-based NFS servers. After it is installed, you can configure the computer to connect to UNIX NFS shares that allow anonymous access. |

Data-Center-Bridging | Data Center Bridging | Data Center Bridging (DCB) is a suite of IEEE standards that are used to enhance Ethernet local area networks by providing hardware-based bandwidth guarantees and transport reliability. Use DCB to help enforce bandwidth allocation on a Converged Network Adapter for offloaded storage traffic such as Internet Small Computer System Interface, RDMA over Converged Ethernet, and Fibre Channel over Ethernet. |

Direct-Play | Direct Play | Direct Play component. |

EnhancedStorage | Enhanced Storage | Enhanced Storage enables support for accessing additional functions made available by Enhanced Storage devices. Enhanced Storage devices have built-in safety features that let you control who can access the data on the device. |

Failover-Clustering | Failover Clustering | Failover Clustering allows multiple servers to work together to provide high availability of server roles. Failover Clustering is often used for File Services, virtual machines, database applications, and mail applications. |

GPMC | Group Policy Management | Group Policy Management is a scriptable Microsoft Management Console (MMC) snap-in, providing a single administrative tool for managing Group Policy across the enterprise. Group Policy Management is the standard tool for managing Group Policy. |

HostGuardian | Host Guardian Hyper-V Support | Host Guardian provides the features necessary on a Hyper-V server to provision Shielded Virtual Machines. |

Web-WHC | IIS Hostable Web Core | IIS Hostable Web Core enables you to write custom code that will host core IIS functionality in your own application. HWC enables your application to serve HTTP requests and use its own applicationHost.config and root web.config configuration files. The HWC application extension is contained in the hwebcore.dll file. |

InkAndHandwritingServices | Ink and Handwriting Services | Ink and Handwriting Services includes Ink Support and Handwriting Recognition. Ink Support provides pen/stylus support, including pen flicks support and APIs for calling handwriting recognition. Handwriting Recognition provides handwriting recognizers for a number of languages. After you install it, these components can be called by an application through the handwriting recognition APIs. |

Internet-Print-Client | Internet Printing Client | Internet Printing Client enables clients to use Internet Printing Protocol (IPP) to connect and print to printers on the network or Internet. |

IPAM | IP Address Management (IPAM) Server | IP Address Management (IPAM) Server provides a central framework for managing your IP address space and corresponding infrastructure servers such as DHCP and DNS. IPAM supports automated discovery of infrastructure servers in an Active Directory forest. IPAM allows you to manage your dynamic and static IPv4 and IPv6 address space, tracks IP address utilization trends, and supports monitoring and management of DNS and DHCP services on your network. |

ISNS | iSNS Server service | Internet Storage Name Server (iSNS) provides discovery services for Internet Small Computer System Interface (iSCSI) storage area networks. iSNS processes registration requests, deregistration requests, and queries from iSNS clients. |

Isolated-UserMode | Isolated User Mode | Isolated User Mode enables Virtualization Based Security on the system. |

LPR-Port-Monitor | LPR Port Monitor | Line Printer Remote (LPR) Port Monitor enables the computer to print to printers that are shared using any Line Printer Daemon (LPD) service. (LPD service is commonly used by UNIX-based computers and printer-sharing devices.) |

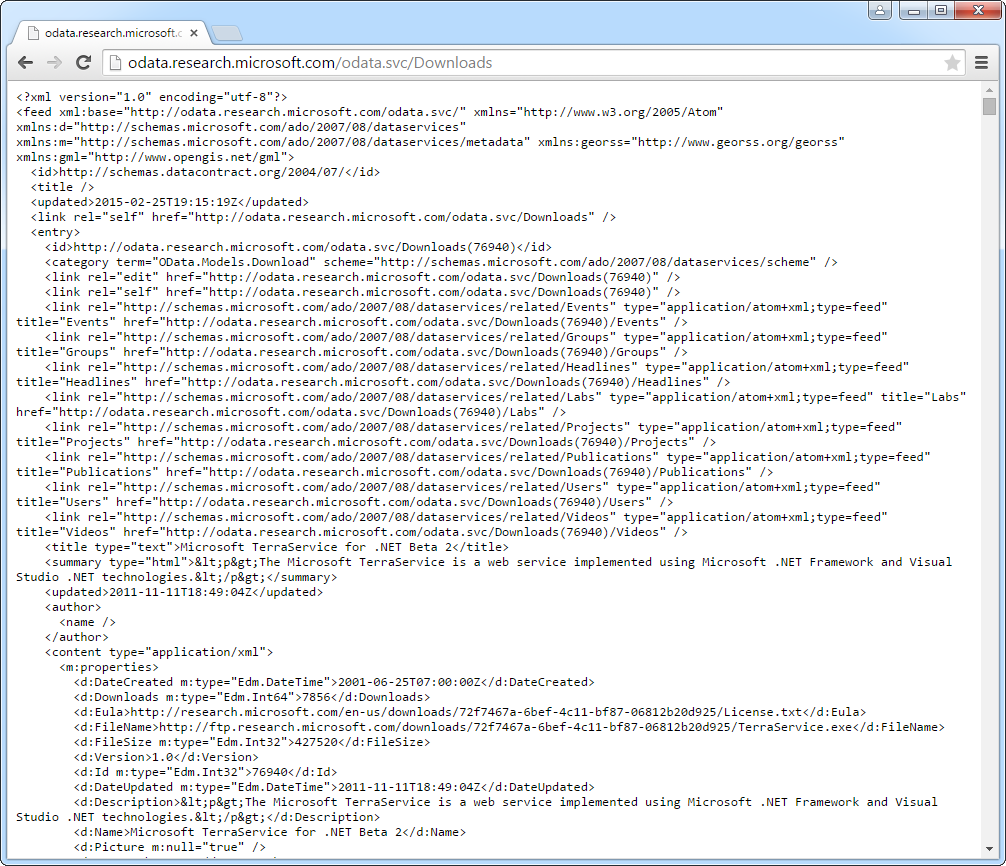

ManagementOdata | Management OData IIS Extension | Management OData IIS Extension is a framework for easily exposing Windows PowerShell cmdlets through an ODATA-based web service running under IIS. After enabling this feature, the user must provide a schema file (which contains definitions of the resources to be exposed) and an implementation of callback interfaces to make the web service functional. |

Server-Media-Foundation | Media Foundation | Media Foundation, which includes Windows Media Foundation, the Windows Media Format SDK, and a server subset of DirectShow, provides the infrastructure required for applications and services to transcode, analyze, and generate thumbnails for media files. Media Foundation is required by the Desktop Experience. |

MSMQ | Message Queuing | Message Queuing provides guaranteed message delivery, efficient routing, security, and priority-based messaging between applications. Message Queuing also accommodates message delivery between applications that run on different operating systems, use dissimilar network infrastructures, are temporarily offline, or that are running at different times. |

MSMQ-Services | Message Queuing Services | Message Queuing Services provides guaranteed message delivery, efficient routing, security, and priority-based messaging between applications. Message Queuing also accommodates message delivery between applications that run on different operating systems, use dissimilar network infrastructures, are temporarily offline, or that are running at different times. |

MSMQ-Server | Message Queuing Server | Message Queuing Server provides guaranteed message delivery, efficient routing, security, and priority-based messaging. It can be used to implement solutions for both asynchronous and synchronous messaging scenarios. |

MSMQ-Directory | Directory Service Integration | Directory Service Integration enables publishing of queue properties to the directory, authentication and encryption of messages using certificates registered in the directory, and routing of messages across directory sites. |

MSMQ-HTTP-Support | HTTP Support | HTTP Support enables the sending of messages over HTTP. |

MSMQ-Triggers | Message Queuing Triggers | Message Queuing Triggers enables the invocation of a COM component or an executable depending on the filters that you define for the incoming messages in a given queue. |

MSMQ-Multicasting | Multicasting Support | Multicasting Support enables queuing and sending of multicast messages to a multicast IP address. |

MSMQ-Routing | Routing Service | Routing Service routes messages between different sites and within a site. |

MSMQ-DCOM | Message Queuing DCOM Proxy | Message Queuing DCOM Proxy enables this computer to act as a DCOM client of a remote Message Queuing server. |

Multipath-IO | Multipath I/O | Multipath I/O, along with the Microsoft Device Specific Module (DSM) or a third-party DSM, provides support for using multiple data paths to a storage device on Windows. |

MultiPoint-Connector-Feature | MultiPoint Connector | MultiPoint Connector enables your machine to be monitored and managed by the MultiPoint Manager and Dashboard apps. |

NLB | Network Load Balancing | Network Load Balancing (NLB) distributes traffic across several servers, using the TCP/IP networking protocol. NLB is particularly useful for ensuring that stateless applications, such as Web servers running Internet Information Services (IIS), are scalable by adding additional servers as the load increases. |

PNRP | Peer Name Resolution Protocol | Peer Name Resolution Protocol allows applications to register and resolve names on your computer so that other computers can communicate with these applications. |

qWave | Quality Windows Audio Video Experience | Quality Windows Audio Video Experience (qWave) is a networking platform for audio video (AV) streaming applications on IP home networks. qWave enhances AV streaming performance and reliability by ensuring network quality-of-service (QoS) for AV applications. It provides mechanisms for admission control, run time monitoring and enforcement, application feedback, and traffic prioritization. On Windows Server platforms, qWave provides only rate-of-flow and prioritization services. |

CMAK | RAS Connection Manager Administration Kit (CMAK) | Create profiles for connecting to remote servers and networks. |

Remote-Assistance | Remote Assistance | Remote Assistance enables you (or a support person) to help users with PC issues or questions. You can view and get control of the user's desktop to troubleshoot and fix problems. Users can also ask for help from friends or co-workers. |

RDC | Remote Differential Compression | Remote Differential Compression computes and transfers the differences between two objects over a network using minimal bandwidth. |

RSAT | Remote Server Administration Tools | Remote Server Administration Tools includes snap-ins and command-line tools for remotely managing roles and features. |

RSAT-Feature-Tools | Feature Administration Tools | Feature Administration Tools includes snap-ins and command-line tools for remotely managing features. |

RSAT-SMTP | SMTP Server Tools | |

RSAT-Feature-Tools-BitLocker | BitLocker Drive Encryption Administration Utilities | BitLocker Drive Encryption Administration Utilities include snap-ins and command-line tools for managing BitLocker Drive Encryption features. |

RSAT-Feature-Tools-BitLocker-RemoteAdminTool | BitLocker Drive Encryption Tools | BitLocker Drive Encryption Tools include the command line tools manage-bde and repair-bde and the BitLocker cmdlets for Windows PowerShell. |

RSAT-Feature-Tools-BitLocker-BdeAducExt | BitLocker Recovery Password Viewer | BitLocker Recovery Password Viewer helps locate BitLocker Drive Encryption recovery passwords for Windows-based computers in Active Directory Domain Services (AD DS). |

RSAT-Bits-Server | BITS Server Extensions Tools | BITS Server Extensions Tools includes the Internet Information Services (IIS) 6.0 Manager and IIS Manager snap-ins. |

RSAT-Clustering | Failover Clustering Tools | Failover Clustering Tools include the Failover Cluster Manager snap-in, the Cluster-Aware Updating interface, and the Failover Cluster module for Windows PowerShell. Additional tools are the Failover Cluster Automation Server and the Failover Cluster Command Interface. |

RSAT-Clustering-Mgmt | Failover Cluster Management Tools | Failover Cluster Management Tools include the Failover Cluster Manager snap-in and the Cluster-Aware Updating interface. |

RSAT-Clustering-PowerShell | Failover Cluster Module for Windows PowerShell | The Failover Cluster Module for Windows PowerShell includes Windows PowerShell cmdlets for managing failover clusters. It also includes the Cluster-Aware Updating module for Windows PowerShell, for installing software updates on failover clusters. |

RSAT-Clustering-AutomationServer | Failover Cluster Automation Server | Failover Cluster Automation Server is the deprecated Component Object Model (COM) programmatic interface, MSClus. |

RSAT-Clustering-CmdInterface | Failover Cluster Command Interface | Failover Cluster Command Interface is the deprecated cluster.exe command-line tool for Failover Clustering. This tool has been replaced by the Failover Clustering module for Windows PowerShell. |

IPAM-Client-Feature | IP Address Management (IPAM) Client | IP Address Management (IPAM) Client is used to connect to and manage a local or remote IPAM server. IPAM provides a central framework for managing IP address space and corresponding infrastructure servers such as DHCP and DNS in an Active Directory forest. |

RSAT-NLB | Network Load Balancing Tools | Network Load Balancing Tools includes the Network Load Balancing Manager snap-in, the Network Load Balancing module for Windows PowerShell, and the nlb.exe and wlbs.exe command-line tools. |

RSAT-Shielded-VM-Tools | Shielded VM Tools | Shielded VM Tools includes the Provisioning Data File Wizard and the Template Disk Wizard. |

RSAT-SNMP | SNMP Tools | Simple Network Management Protocol (SNMP) Tools includes tools for managing SNMP. |

RSAT-Storage-Replica | Storage Replica Management Tools | Storage Replica Management Tools includes the CIM provider and the Storage Replica module for Windows PowerShell. |

RSAT-WINS | WINS Server Tools | WINS Server Tools includes the WINS Manager snap-in and command-line tool for managing the WINS Server. |

RSAT-Role-Tools | Role Administration Tools | Role Administration Tools includes snap-ins and command-line tools for remotely managing roles. |

RSAT-AD-Tools | AD DS and AD LDS Tools | Active Directory Domain Services (AD DS) and Active Directory Lightweight Directory Services (AD LDS) Tools includes snap-ins and command-line tools for remotely managing AD DS and AD LDS. |

RSAT-AD-PowerShell | Active Directory module for Windows PowerShell | The Active Directory module for Windows PowerShell and the tools it provides can be used by Active Directory administrators to manage Active Directory Domain Services (AD DS) at the command line. |

RSAT-ADDS | AD DS Tools | Active Directory Domain Services (AD DS) Tools includes snap-ins and command-line tools for remotely managing AD DS. |

RSAT-AD-AdminCenter | Active Directory Administrative Center | Active Directory Administrative Center provides users and network administrators with an enhanced Active Directory data management experience and a rich graphical user interface (GUI) to perform common Active Directory object management tasks. |

RSAT-ADDS-Tools | AD DS Snap-Ins and Command-Line Tools | Active Directory Domain Services Snap-Ins and Command-Line Tools includes Active Directory Users and Computers, Active Directory Domains and Trusts, Active Directory Sites and Services, and other snap-ins and command-line tools for remotely managing Active Directory domain controllers. |

RSAT-ADLDS | AD LDS Snap-Ins and Command-Line Tools | Active Directory Lightweight Directory Services (AD LDS) Snap-Ins and Command-Line Tools includes Active Directory Sites and Services, ADSI Edit, Schema Manager, and other snap-ins and command-line tools for managing AD LDS. |

RSAT-Hyper-V-Tools | Hyper-V Management Tools | Hyper-V Management Tools includes GUI and command-line tools for managing Hyper-V. |

Hyper-V-Tools | Hyper-V GUI Management Tools | Hyper-V GUI Management Tools includes the Hyper-V Manager snap-in and Virtual Machine Connection tool. |

Hyper-V-PowerShell | Hyper-V Module for Windows PowerShell | Hyper-V Module for Windows PowerShell includes Windows PowerShell cmdlets for managing Hyper-V. |

RSAT-RDS-Tools | Remote Desktop Services Tools | Remote Desktop Services Tools includes the snap-ins for managing Remote Desktop Services. |

RSAT-RDS-Gateway | Remote Desktop Gateway Tools | Remote Desktop Gateway Tools helps you manage and monitor RD Gateway server status and events. By using Remote Desktop Gateway Manager, you can specify events (such as unsuccessful connection attempts to the RD Gateway server) that you want to monitor for auditing purposes. |

RSAT-RDS-Licensing-Diagnosis-UI | Remote Desktop Licensing Diagnoser Tools | Remote Desktop Licensing Diagnoser Tools helps you determine which license servers the RD Session Host server or RD Virtualization Host server is configured to use, and whether those license servers have licenses available to issue to users or computing devices that are connecting to the servers. |

RDS-Licensing-UI | Remote Desktop Licensing Tools | Remote Desktop Licensing Tools helps you manage the licenses required to connect to a Remote Desktop Session Host server or a virtual desktop. You can use RD Licensing to install, issue, and track the availability of licenses. |

UpdateServices-RSAT | Windows Server Update Services Tools | Windows Server Update Services Tools includes graphical and PowerShell tools for managing WSUS. |

UpdateServices-API | API and PowerShell cmdlets | Installs the .NET API and PowerShell cmdlets for remote management, automated task creation, and managing WSUS from the command line. |

UpdateServices-UI | User Interface Management Console | Installs the WSUS Management Console user interface (UI). |

RSAT-ADCS | Active Directory Certificate Services Tools | Active Directory Certificate Services Tools includes the Certification Authority, Certificate Templates, Enterprise PKI, and Online Responder Management snap-ins. |

RSAT-ADCS-Mgmt | Certification Authority Management Tools | Active Directory Certification Authority Management Tools includes the Certification Authority, Certificate Templates, and Enterprise PKI snap-ins. |

RSAT-Online-Responder | Online Responder Tools | Online Responder Tools includes the Online Responder Management snap-in. |

RSAT-ADRMS | Active Directory Rights Management Services Tools | Active Directory Rights Management Services Tools includes the Active Directory Rights Management Services snap-in. |

RSAT-DHCP | DHCP Server Tools | DHCP Server Tools includes the DHCP MMC snap-in, DHCP server netsh context and Windows PowerShell module for DHCP Server. |

RSAT-DNS-Server | DNS Server Tools | DNS Server Tools includes the DNS Manager snap-in, dnscmd.exe command-line tool and Windows PowerShell module for DNS Server. |

RSAT-Fax | Fax Server Tools | Fax Server Tools includes the Fax Service Manager snap-in. |

RSAT-File-Services | File Services Tools | File Services Tools includes snap-ins and command-line tools for remotely managing File Services. |

RSAT-DFS-Mgmt-Con | DFS Management Tools | Includes the DFS Management snap-in, DFS Replication service, DFS Namespaces PowerShell commands, and the dfsutil, dfscmd, dfsdiag, dfsradmin, and dfsrdiag commands. |

RSAT-FSRM-Mgmt | File Server Resource Manager Tools | Includes the File Server Resource Manager snap-in and the dirquota, filescrn, and storrept commands. |

RSAT-NFS-Admin | Services for Network File System Management Tools | Includes the Network File System snap-in and the nfsadmin, showmount, and rpcinfo commands. |

RSAT-CoreFile-Mgmt | Share and Storage Management Tool | Includes the Share and Storage Management snap-in, which lets you create and modify network shares and manage the physical disks on a server. |

RSAT-NetworkController | Network Controller Management Tools | Network Controller Management Tools includes PowerShell tools for managing the Network Controller role. |

RSAT-NPAS | Network Policy and Access Services Tools | Network Policy and Access Services Tools includes the Network Policy Server snap-in. |

RSAT-Print-Services | Print and Document Services Tools | Print and Document Services Tools includes the Print Management snap-in. |

RSAT-RemoteAccess | Remote Access Management Tools | Remote Access Management Tools includes graphical and PowerShell tools for managing Remote Access. |

RSAT-RemoteAccess-Mgmt | Remote Access GUI and Command-Line Tools | Includes the Remote Access GUI and Command-Line Tools. Remote Access administrators can use the tools to manage Remote Access. |

RSAT-RemoteAccess-PowerShell | Remote Access module for Windows PowerShell | Includes the Remote Access provider and cmdlets. Remote Access administrators can use the Windows PowerShell environment and the tools it provides to manage Remote Access at the command line. |

RSAT-VA-Tools | Volume Activation Tools | Volume Activation Tools console can be used to manage volume activation license keys on a Key Management Service (KMS) host or in Active Directory Domain Services. You can use the Volume Activation Tools to install, activate, and manage one or more volume activation license keys, and to configure KMS settings. |

WDS-AdminPack | Windows Deployment Services Tools | <a href="http://go.microsoft.com/fwlink/?LinkId=294848">Windows Deployment Services Tools</a> includes the Windows Deployment Services snap-in, wdsutil.exe command-line tool, and Remote Install extension for the Active Directory Users and Computers snap-in. |

RSAT-HostGuardianService | Windows Server Host Guardian Service Tools | Windows Server Host Guardian Service Tools include the Remote Attestation Service module and the Key Protection Service module for Windows PowerShell. |

RPC-over-HTTP-Proxy | RPC over HTTP Proxy | Remote Procedure Call (RPC) over HTTP Proxy relays RPC traffic from client applications over HTTP to the server as an alternative to clients accessing the server over a VPN connection. |

Setup-and-Boot-Event-Collection | Setup and Boot Event Collection | This feature enables the collection and logging of setup and boot events from other computers on this network. |

Simple-TCPIP | Simple TCP/IP Services | Simple TCP/IP Services supports the following TCP/IP services: Character Generator, Daytime, Discard, Echo and Quote of the Day. Simple TCP/IP Services is provided for backward compatibility and should not be installed unless it is required. |

FS-SMB1 | SMB 1.0/CIFS File Sharing Support | Support for the SMB 1.0/CIFS file sharing protocol, and the Computer Browser protocol. |

FS-SMBBW | SMB Bandwidth Limit | SMB Bandwidth Limit provides a mechanism to track SMB traffic per category (Default, Hyper-V or Live Migration) and allows you to limit the amount of traffic allowed for a given category. It is commonly used to limit the bandwidth used by Live Migration over SMB. |

SMTP-Server | SMTP Server | |

SNMP-Service | SNMP Service | Simple Network Management Protocol (SNMP) Service includes agents that monitor the activity in network devices and report to the network console workstation. |

SNMP-WMI-Provider | SNMP WMI Provider | SNMP Windows Management Instrumentation (WMI) Provider enables WMI client scripts and applications to access SNMP information. Clients can use WMI C++ interfaces and scripting objects to communicate with network devices that use the SNMP protocol and can receive SNMP traps as WMI events. |

Storage-Replica | Storage Replica | Allows you to replicate data using the Storage Replica feature. |

Telnet-Client | Telnet Client | Telnet Client uses the Telnet protocol to connect to a remote Telnet server and run applications on that server. |

TFTP-Client | TFTP Client | Trivial File Transfer Protocol (TFTP) Client is used to read files from, or write files to, a remote TFTP server. TFTP is primarily used by embedded devices or systems that retrieve firmware, configuration information, or a system image during the boot process from a TFTP server. |

User-Interfaces-Infra | User Interfaces and Infrastructure | This contains the available User Experience and Infrastructure options. |

Server-Gui-Mgmt-Infra | Graphical Management Tools and Infrastructure | Graphical Management Tools and Infrastructure includes infrastructure and a minimal server interface that supports GUI management tools. |

Desktop-Experience | Desktop Experience | Desktop Experience includes features of Windows 8.1, including Windows Search. Windows Search lets you search your device and the Internet from one place. To learn more about Desktop Experience, including how to disable web results from Windows Search, read http://go.microsoft.com/fwlink/?LinkId=390729 |

Server-Gui-Shell | Server Graphical Shell | Server Graphical Shell provides the full Windows graphical user interface for the server, including File Explorer and Internet Explorer. Uninstalling the shell reduces the servicing footprint of the installation while leaving the ability to run local GUI management tools as part of the minimal server interface. |

FabricShieldedTools | VM Shielding Tools for Fabric Management | Provides Shielded VM utilities that are used by Fabric Management solutions and should be installed on the Fabric Management server. |

Biometric-Framework | Windows Biometric Framework | Windows Biometric Framework (WBF) allows fingerprint devices to be used to identify and verify identities and to sign in to Windows. WBF includes the components required to enable the use of fingerprint devices. |

Windows-Identity-Foundation | Windows Identity Foundation 3.5 | Windows Identity Foundation (WIF) 3.5 is a set of .NET Framework classes that can be used for implementing claims-based identity in your .NET 3.5 and 4.0 applications. WIF 3.5 has been superseded by WIF classes that are provided as part of .NET 4.5. It is recommended that you use .NET 4.5 for supporting claims-based identity in your applications. |

Windows-Internal-Database | Windows Internal Database | Windows Internal Database is a relational data store that can be used only by Windows roles and features, such as Active Directory Rights Management Services, Windows Server Update Services, and Windows System Resource Manager. |

PowerShellRoot | Windows PowerShell | Windows PowerShell enables you to automate local and remote Windows administration. This task-based command-line shell and scripting language is built on the Microsoft .NET Framework. It includes hundreds of built-in commands and lets you write and distribute your own commands and scripts. |

PowerShell | Windows PowerShell 5.0 | Windows PowerShell enables you to automate local and remote Windows administration. This task-based command-line shell and scripting language is built on the Microsoft .NET Framework. It includes hundreds of built-in commands and lets you write and distribute your own commands and scripts. |

PowerShell-V2 | Windows PowerShell 2.0 Engine | Windows PowerShell 2.0 Engine includes the core components from Windows PowerShell 2.0 for backward compatibility with existing Windows PowerShell host applications. |

DSC-Service | Windows PowerShell Desired State Configuration Service | Windows PowerShell Desired State Configuration Service supports configuration management of multiple nodes from a single repository. |

PowerShell-ISE | Windows PowerShell ISE | Windows PowerShell Integrated Scripting Environment (ISE) lets you compose, edit, and debug scripts and run multi-line interactive commands in a graphical environment. Features include IntelliSense, tab completion, snippets, color-coded syntax, line numbering, selective execution, graphical debugging, and support for right-to-left languages and Unicode. |

WindowsPowerShellWebAccess | Windows PowerShell Web Access | Windows PowerShell Web Access lets a server act as a web gateway, through which an organization's users can manage remote computers by running Windows PowerShell sessions in a web browser. After Windows PowerShell Web Access is installed, an administrator completes the gateway configuration in the Web Server (IIS) management console. |

WAS | Windows Process Activation Service | Windows Process Activation Service generalizes the IIS process model, removing the dependency on HTTP. All the features of IIS that were previously available only to HTTP applications are now available to applications hosting Windows Communication Foundation (WCF) services, using non-HTTP protocols. IIS 10.0 also uses Windows Process Activation Service for message-based activation over HTTP. |

WAS-Process-Model | Process Model | Process Model hosts Web and WCF services. Introduced with IIS 6.0, the process model is a new architecture that features rapid failure protection, health monitoring, and recycling. Windows Process Activation Service Process Model removes the dependency on HTTP. |

WAS-NET-Environment | .NET Environment 3.5 | .NET Environment supports managed code activation in the process model. |

WAS-Config-APIs | Configuration APIs | Configuration APIs enable applications that are built using the .NET Framework to configure Windows Process Activation Model programmatically. This lets the application developer automatically configure Windows Process Activation Model settings when the application runs instead of requiring the administrator to manually configure these settings. |

Search-Service | Windows Search Service | Windows Search Service provides fast file searches on a server from clients that are compatible with Windows Search Service. Windows Search Service is intended for desktop search or small file server scenarios, and not for enterprise scenarios. |

Windows-Server-Antimalware-Features | Windows Server Antimalware Features | Windows Server Antimalware helps protect your machine from malware. |

Windows-Server-Antimalware | Windows Server Antimalware | Windows Server Antimalware helps protect your machine from malware. |

Windows-Server-Antimalware-Gui | GUI for Windows Server Antimalware | Provides a graphical user interface for Windows Server Antimalware. |

Windows-Server-Backup | Windows Server Backup | Windows Server Backup allows you to back up and recover your operating system, applications and data. You can schedule backups, and protect the entire server or specific volumes. |

Migration | Windows Server Migration Tools | Windows Server Migration Tools includes Windows PowerShell cmdlets that facilitate migration of server roles, operating system settings, files, and shares from computers that are running earlier versions of Windows Server or Windows Server 2012 to computers that are running Windows Server 2012. |

WindowsStorageManagementService | Windows Standards-Based Storage Management | Windows Standards-Based Storage Management provides the ability to discover, manage, and monitor storage devices using management interfaces that conform to the SMI-S standard. This functionality is exposed as a set of Windows Management Instrumentation (WMI) classes and Windows PowerShell cmdlets. |

Windows-TIFF-IFilter | Windows TIFF IFilter | Windows TIFF IFilter (Tagged Image File Format Index Filter) performs optical character recognition (OCR) on TIFF 6.0-compliant files (.TIF and .TIFF extensions), which enables indexing and full-text search of those files. |

WinRM-IIS-Ext | WinRM IIS Extension | Windows Remote Management (WinRM) IIS Extension enables a server to receive a management request from a client by using WS-Management. WinRM is the Microsoft implementation of the WS-Management protocol, which provides a secure way to communicate with local and remote computers by using Web services. |

WINS | WINS Server | Windows Internet Naming Service (WINS) Server provides a distributed database for registering and querying dynamic mappings of NetBIOS names for computers and groups used on your network. WINS maps NetBIOS names to IP addresses and solves the problems arising from NetBIOS name resolution in routed environments. |

Wireless-Networking | Wireless LAN Service | Wireless LAN (WLAN) Service configures and starts the WLAN AutoConfig service, regardless of whether the computer has any wireless adapters. WLAN AutoConfig enumerates wireless adapters, and manages both wireless connections and the wireless profiles that contain the settings required to configure a wireless client to connect to a wireless network. |

WoW64-Support | WoW64 Support | Includes all of WoW64 to support running 32-bit applications on Server Core installations. This feature is required for full Server installations. Uninstalling WoW64 Support will convert a full Server installation into a Server Core installation. |

XPS-Viewer | XPS Viewer | The XPS Viewer is used to read, set permissions for, and digitally sign XPS documents. |